Teach you to use Python as LDA theme extraction and visualization

tags: python

Foreword

The high -speed development of the information age allows us to use mobile phones, computers and other devices to easily obtain information from the Internet. However, this seems to be a double -edged sword. While we get a lot of information, we may not have much time to read them one by one, so that the phenomenon of "collection never stops and learning never starts" is common.

This article is estimated that it will also eat ash in the collection clip in the future!

In order to efficiently handle the huge document information, during the process of learning, I came into contact with the LDA theme extraction method. After learning, I found that it is particularly interesting. Its main function is

can classify many documents on themes, while displaying the theme

When I found this function, I started to think about it. For example, I can achieve a few fun things based on this function.

- Analyze the articles on the large V on the writing platform to extract and visualize the theme of the works published, so as to find a more popular theme or the theme that is easier to fire on the platform, so as to have a certain guiding significance for your writing.

- Artificially select a large number of articles containing junk advertising, then train the LDA model, extract its theme, and then use the trained LDA model to make the topic probability distribution of the topic probability of the theme of the articles. The article is removed, of course, you can also extract the topic you are most interested in.

The above two points are the ideas that individuals start according to the needs. Of course, these two ideas have been preliminary verification, and there is indeed some implementation possibilities.

In this article, I will teach you how to use Python based on Python, use LDA to draw and visualize the theme of the documentation. In order to let you read it interested, I will attach the effect of visualization first.

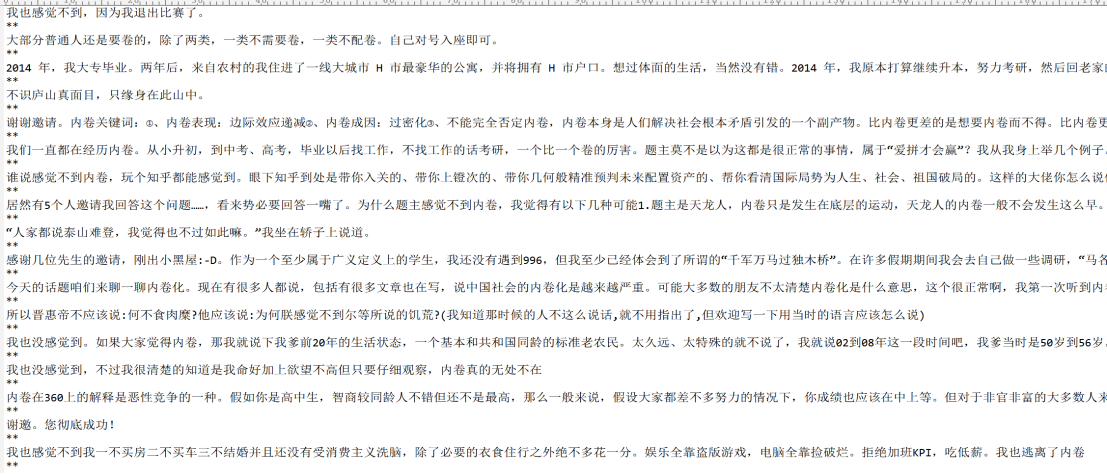

The picture above is the result of the 218 answers to a million powder big V of Zhihu. The result of the LDA theme was drawn and visually visualized. You can see that the theme she answered is very concentrated.

If you are interested after watching the visual effect, then you can start reading down. Of course Running code.

before the start

- Python version requirements

Python 3.7 or above

2. Library that needs to be installed

tqdm

requests

retry

jieba

multitasking

pandas

pyLDAvis

bs4

sklearn

numpy

openpyxl

xlrd

The installation method of the library is: open the CMD (command prompt or other terminal tools), enter the following code

pip install tqdm requests multitasking retry bs4 pandas pyLDAvis sklearn numpy jieba xlrd openpyxlAfter entering, press Enter key to execute the code, wait for the SuccessFully to appear

Preparation

Text converter

Computers cannot directly understand the text we usually use, and it can only deal with digital. In order to make it understand the text we provided smoothly, we need to convert a series of in our text, such as marking the words in the text to form a mapping of numbers and words.

The LDA theme extraction is based on statistical science. To this end, we can consider that the words of the documentation are marked, and at the same time, it counts the frequency of the corresponding words, and constructs a two -dimensional word frequency matrix in turn. That is actually not enough. But it doesn't matter, I will bring you the following example to understand what this process is.

Suppose there are so many texts

The weather is very good today

The weather is really goodWe can find that there are a few words in these two texts (do not consider a single word)

today

weather

really

very goodIf we count the frequency of each word in each document, then we can make these data into such tables

How to explain this? Please look at the second line of the table above. If it is represented by a list in Python, then it is like this

[1,1,1,0]It corresponds to

The weather is very good todaySuch a sentence. Of course, this will inevitably lose some information related to sequences, but when a single document is rich enough, the loss of this information is OK.

So how do we construct such a table to digitize each document digitize?

Here I have to introduce a good tool provided by Sklearn to us.

from sklearn.feature_extraction.text import CountVectorizer

import pandas as pd

#

documNets = ['The weather is very good today', 'The weather is really good today']

count_vectorizer = CountVectorizer()

# Frequency matrix

cv = count_vectorizer.fit_transform(documnets)

#

feature_names = count_vectorizer.get_feature_names()

# Frequency matrix

matrix = cv.toarray()

df = pd.DataFrame(matrix,columns=feature_names)

print(df)Code operation output

The weather is very good today

0 1 1 1 0

1 1 1 1 1Note that each line has 5 numbers, but the latter four are frequent words. The first number is the label of the document (starting from 0)

If you are running in the jober, then it will be like this

The above only rely on word frequency to construct the matrix, so it is obviously unreasonable because some common words are definitely high, but it cannot reflect the importance of a word.

To this end, we introduced the TF-IDF to construct the frequency matrix that can describe the importance of words. The specific principles of TF-IDF will not be introduced. This article will not be introduced.

The Python implementation code of the TF -DF constructor frequency matrix is as follows

from sklearn.feature_extraction.text import TfidfVectorizer

import pandas as pd

#

documNets = ['The weather is very good today', 'The weather is really good today']

tf_idf_vectorizer = TfidfVectorizer()

# Frequency matrix

tf_idf = tf_idf_vectorizer.fit_transform(documnets)

#

feature_names = tf_idf_vectorizer.get_feature_names()

# Frequency matrix

matrix = tf_idf.toarray()

df = pd.DataFrame(matrix,columns=feature_names)Code operation output

The weather is very good today

0 0.577350 0.577350 0.577350 0.000000

1 0.448321 0.448321 0.448321 0.630099If you are running in the jober, the result you get should be like this

Text word

If you observe the two short texts I posted before, you will find that there is always a space between the words in those texts.

But for Chinese documents, it is almost impossible for you to distinguish it with spaces.

So why do I provide such text? Because Sklearn's word -frequency matrix constructor defaults to identify the document as an English mode. If you have learned English, it is not difficult to find that words are usually separated by spaces.

But our original Chinese documents cannot do this. To this end, we need to divide the Chinese documents in advance, and then stitch these words with spaces to eventually form sentences like English.

When it comes to words, we can consider the more famous Chinese segmentation tools. For example

The weather today is very goodPython sample code of segmentation is as follows

import jieba

# Sentence

Sentence = 'The weather today is very good'

#

words = jieba.lcut(sentence)

# Output word list

print(words)

# Use space splicing and output

print(" ".join(words))Code operation output is as follows

['Today', '', 'weather', 'very', 'good']

The weather today is very goodIt can be seen that we have realized the function of Chinese sentences to English format sentences!

Of course, there are usually more punctuation symbols in the document. These are not of great significance to us, so we can uniform these symbols into spaces before we perform a word. I will not demonstrate this process first.

The subsequent code will be implemented in this process (the main principle is to use the replacement function of regular expressions)

LDA theme extraction based on TF-IDF

Before the theme was drawn, we obviously need to prepare a certain amount of documents. In the previous article, I wrote a program to get the answering text data of Zhihu respondent. If you open it with Excel, you will find it as long (CSV file)

In order to allow you to get it better, I will attach its download link again. You only need to open it with a computer browser to start downloading (if there is no automatic download, you can press the computer shortcut Ctrl S for download. Remember, it is best to put the downloaded file in the same class of code!)

Pure text link

https://raw.staticdn.net/Micro-sheep/Share/main/zhihu/answers.csvYou can click the link

Because it is a CSV file, and the programming language we use is Python, so we can consider using the Pandas library to operate it to read its Python instance code as follows

import pandas as pd

import os

# The URL below is a remote link of the CSV file. If you miss this file, you need to open this link with a browser

# Download it, then put it in the code running command, and the file name should be consistent with the following csv_path

url = 'https://raw.githubusercontents.com/Micro-sheep/Share/main/zhihu/answers.csv'

csv_path = 'answers.csv'

if not os.path.exists(csv_path):

Print (F'Please open {url} with the browser and download the file (if there is no automatic download, you can press the keyboard shortcut ctrl s to start the download in the browser.

os.exit()

df = pd.read_csv(csv_path)

print(list(df.columns))The running output is as follows

['Author Name', 'Author ID', 'Author token', 'Answer the number of likes',' Answer time ',' Update Time ',' Answer ID ',' Question ID ',' Question content ',' Answer content']I exported the name.

If you are using Jupyter, what you see should be like this (I chose to check the two columns above)

There are many columns in this CSV file. Of course, we mainly pay attention to the content of the answer. It is the document collection we choose. For this file, we will perform the following operations

Delete air value, repetition, removing meaningless punctuation symbols, segmentation and stitching into English sentence format.

The specific Python code is as follows

import pandas as pd

import os

import re

import jieba

# The URL below is a remote link of the CSV file. If you miss this file, you need to open this link with a browser

# Download it, then put it in the code running command, and the file name should be consistent with the following csv_path

url = 'https://raw.githubusercontents.com/Micro-sheep/Share/main/zhihu/answers.csv'

# Local CSV documentation path

csv_path = 'answers.csv'

# Column in the CSV file to be split

document_column_name = 'Answer content'

pattern = u'[\\s\\d,.<>/?:;\'\"[\\]{}()\\|~!\t"@#$%^&*\\-_=+a-zA-Z,。\n《》、?:;“”‘’{}【】()…¥!—┄-]+'

if not os.path.exists(csv_path):

Print (F'Please open {url} with the browser and download the file (if there is no automatic download, you can press the keyboard shortcut ctrl s to start the download in the browser.

os.exit()

df = pd.read_csv(

csv_path,

encoding='utf-8-sig')

df = df.drop_duplicates()

df = df.dropna()

df = df.rename(columns={

document_column_name: 'text'

})

df['cut'] = df['text'].apply(lambda x: str(x))

df['cut'] = df['cut'].apply(lambda x: re.sub(pattern, ' ', x))

df['cut'] = df['cut'].apply(lambda x: " ".join(jieba.lcut(x)))

print(df['cut'])Of course, these a few operations may be a bit long, so if you like high force, I can also provide you with a higher code below

import pandas as pd

import os

import re

import jieba

# The URL below is a remote link of the CSV file. If you miss this file, you need to open this link with a browser

# Download it, then put it in the code running command, and the file name should be consistent with the following csv_path

url = 'https://raw.githubusercontents.com/Micro-sheep/Share/main/zhihu/answers.csv'

# Local CSV documentation path

csv_path = 'answers.csv'

# Column in the CSV file to be split

document_column_name = 'Answer content'

pattern = u'[\\s\\d,.<>/?:;\'\"[\\]{}()\\|~!\t"@#$%^&*\\-_=+a-zA-Z,。\n《》、?:;“”‘’{}【】()…¥!—┄-]+'

if not os.path.exists(csv_path):

Print (F'Please open {url} with the browser and download the file (if there is no automatic download, you can press the keyboard shortcut ctrl s to start the download in the browser.

os.exit()

df = (

pd.read_csv(

csv_path,

encoding='utf-8-sig')

.drop_duplicates()

.dropna()

.rename(columns={

document_column_name: 'text'

}))

#

df['cut'] = (

df['text']

.apply(lambda x: str(x))

.apply(lambda x: re.sub(pattern, ' ', x))

.apply(lambda x: " ".join(jieba.lcut(x)))

)

print(df['cut'])After running, we will add a column of processed text to the original form.

Next, we will use the knowledge of this processing document to construct the TF-idf matrix with the knowledge written before. The python sample code is as follows (the key is the last few lines)

import pandas as pd

import os

import re

import jieba

from sklearn.feature_extraction.text import TfidfVectorizer

# The URL below is a remote link of the CSV file. If you miss this file, you need to open this link with a browser

# Download it, then put it in the code running command, and the file name should be consistent with the following csv_path

url = 'https://raw.githubusercontents.com/Micro-sheep/Share/main/zhihu/answers.csv'

# Local CSV documentation path

csv_path = 'answers.csv'

# Column in the CSV file to be split

document_column_name = 'Answer content'

pattern = u'[\\s\\d,.<>/?:;\'\"[\\]{}()\\|~!\t"@#$%^&*\\-_=+a-zA-Z,。\n《》、?:;“”‘’{}【】()…¥!—┄-]+'

if not os.path.exists(csv_path):

Print (F'Please open {url} with the browser and download the file (if there is no automatic download, you can press the keyboard shortcut ctrl s to start the download in the browser.

os.exit()

df = (

pd.read_csv(

csv_path,

encoding='utf-8-sig')

.drop_duplicates()

.dropna()

.rename(columns={

document_column_name: 'text'

}))

#

df['cut'] = (

df['text']

.apply(lambda x: str(x))

.apply(lambda x: re.sub(pattern, ' ', x))

.apply(lambda x: " ".join(jieba.lcut(x)))

)

# Tf-idf

tf_idf_vectorizer = TfidfVectorizer()

tf_idf = tf_idf_vectorizer.fit_transform(df['cut'])In order to see more intuitive, I also converted this matrix into a form. The example code is as follows

import pandas as pd

import os

import re

import jieba

from sklearn.feature_extraction.text import TfidfVectorizer

# The URL below is a remote link of the CSV file. If you miss this file, you need to open this link with a browser

# Download it, then put it in the code running command, and the file name should be consistent with the following csv_path

url = 'https://raw.githubusercontents.com/Micro-sheep/Share/main/zhihu/answers.csv'

# Local CSV documentation path

csv_path = 'answers.csv'

# Column in the CSV file to be split

document_column_name = 'Answer content'

pattern = u'[\\s\\d,.<>/?:;\'\"[\\]{}()\\|~!\t"@#$%^&*\\-_=+a-zA-Z,。\n《》、?:;“”‘’{}【】()…¥!—┄-]+'

if not os.path.exists(csv_path):

Print (F'Please open {url} with the browser and download the file (if there is no automatic download, you can press the keyboard shortcut ctrl s to start the download in the browser.

os.exit()

df = (

pd.read_csv(

csv_path,

encoding='utf-8-sig')

.drop_duplicates()

.dropna()

.rename(columns={

document_column_name: 'text'

}))

#

df['cut'] = (

df['text']

.apply(lambda x: str(x))

.apply(lambda x: re.sub(pattern, ' ', x))

.apply(lambda x: " ".join(jieba.lcut(x)))

)

# Tf-idf

tf_idf_vectorizer = TfidfVectorizer()

tf_idf = tf_idf_vectorizer.fit_transform(df['cut'])

#The feature word list

feature_names = tf_idf_vectorizer.get_feature_names()

#The characteristic words TF-IDF matrix

matrix = tf_idf.toarray()

feature_names_df = pd.DataFrame(matrix,columns=feature_names)

print(feature_names_df)

feature_names_dfIf you run in the jober, it will be like this (some code is intercepted)

So, with such a matrix, how can we feed it to LDA to eat?

Oh, we have no LDA yet, so we have to make it first. Of course, we can use Sklearn to provide us with a good LDA model. The Python example code is as follows as follows

from sklearn.decomposition import LatentDirichletAllocation

# Specify the number of LDA topics

n_topics = 5

lda = LatentDirichletAllocation(

n_components=n_topics, max_iter=50,

learning_method='online',

learning_offset=50.,

random_state=0)

print(lda)Output as follows

LatentDirichletAllocation(learning_method='online', learning_offset=50.0,

max_iter=50, n_components=5, random_state=0)Well, it is a thing that I can't understand, but the problem is not big.

After constructing it, we have to feed it to the East, so that it can produce it, and the Python example code for him to feed him is as follows (the core code is the last sentence)

from sklearn.decomposition import LatentDirichletAllocation

import pandas as pd

import os

import re

import jieba

from sklearn.feature_extraction.text import TfidfVectorizer

# The URL below is a remote link of the CSV file. If you miss this file, you need to open this link with a browser

# Download it, then put it in the code running command, and the file name should be consistent with the following csv_path

url = 'https://raw.githubusercontents.com/Micro-sheep/Share/main/zhihu/answers.csv'

# Local CSV documentation path

csv_path = 'answers.csv'

# Column in the CSV file to be split

document_column_name = 'Answer content'

pattern = u'[\\s\\d,.<>/?:;\'\"[\\]{}()\\|~!\t"@#$%^&*\\-_=+a-zA-Z,。\n《》、?:;“”‘’{}【】()…¥!—┄-]+'

if not os.path.exists(csv_path):

Print (F'Please open {url} with the browser and download the file (if there is no automatic download, you can press the keyboard shortcut ctrl s to start the download in the browser.

os.exit()

df = (

pd.read_csv(

csv_path,

encoding='utf-8-sig')

.drop_duplicates()

.dropna()

.rename(columns={

document_column_name: 'text'

}))

#

df['cut'] = (

df['text']

.apply(lambda x: str(x))

.apply(lambda x: re.sub(pattern, ' ', x))

.apply(lambda x: " ".join(jieba.lcut(x)))

)

# Tf-idf

tf_idf_vectorizer = TfidfVectorizer()

tf_idf = tf_idf_vectorizer.fit_transform(df['cut'])

#The feature word list

feature_names = tf_idf_vectorizer.get_feature_names()

#The characteristic words TF-IDF matrix

matrix = tf_idf.toarray()

# Specify the number of LDA topics

n_topics = 5

lda = LatentDirichletAllocation(

n_components=n_topics, max_iter=50,

learning_method='online',

learning_offset=50.,

random_state=0)

# Core, the TF-IDF matrix generated by LDA

lda.fit(tf_idf)So, what output can we get after this?

Of course, there are many types of output, we only pay attention to the following two outputs

What is the probability of each theme of each theme of each theme?

In order to better describe these two outputs, I will define the two functions to deal with it so that it turns it into the DataFrame of Pandas for follow -up processing.

Python sample code is as follows

import numpy as np

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.decomposition import LatentDirichletAllocation

import pandas as pd

def top_words_data_frame(model: LatentDirichletAllocation,

tf_idf_vectorizer: TfidfVectorizer,

n_top_words: int) -> pd.DataFrame:

'''

Seek the word n_top_words of each theme

Parameters

----------

Model: LatentDirichletallocation of Sklearn

TF_IDF_Vectorizer: TFIDFVectorizer of Sklearn

n_top_Words: Previous n_top_words a theme

Return

------

DataFrame: Including the distribution of theme words

'''

rows = []

feature_names = tf_idf_vectorizer.get_feature_names()

for topic in model.components_:

top_words = [feature_names[i]

for i in topic.argsort()[:-n_top_words - 1:-1]]

rows.append(top_words)

columns = [f'topic {i+1}' for i in range(n_top_words)]

df = pd.DataFrame(rows, columns=columns)

return df

def predict_to_data_frame(model: LatentDirichletAllocation, X: np.ndarray) -> pd.DataFrame:

'''

Find the probability distribution of document themes

Parameters

----------

Model: LatentDirichletallocation of Sklearn

X: Word directional matrix

Return

------

DataFrame: Including the distribution of theme words

'''

# Find the theme probability distribution matrix of the given document

matrix = model.transform(X)

columns = [f'P(topic {i+1})' for i in range(len(model.components_))]

df = pd.DataFrame(matrix, columns=columns)

return dfOf course, it is difficult for you to directly look at the above code. It is difficult to know what it is doing. In order to allow you to better understand what this is, I will attach you the official document link of Sklearn.https://scikit-learn.org/stable/modules/generated/sklearn.decomposition.LatentDirichletAllocation.htmlIf you don’t understand English, you can translate it with a browser

At the same time, there are screenshots

With the above explanation, you may be easier to understand why the function is written like that. Of course, it doesn't matter if you don't understand it. It really needs a certain Python knowledge accumulation. If you skip this part, it will not hinder your goal of using LDA for the theme extraction.

Below I will combine the above steps to show you the use of LDA for theme extraction, and generate the python code of the theme probability distribution of the documentation and the theme distribution of the CSV file.

The complete code for the LDA theme of the specified file (irreversible)

import numpy as np

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.decomposition import LatentDirichletAllocation

import pandas as pd

import jieba

import re

import os

# Local CSV file can be a local file or a remote file

source_csv_path = 'answers.csv'

# The text of the text in the text CSV file, note that you must fill in it here, otherwise you will report an error!

document_column_name = 'Answer content'

# Output the file path of the topic

top_words_csv_path = 'top-topic-words.csv'

# Output file path of the theme of each documentation

predict_topic_csv_path = 'document-distribution.csv'

# M html file path

html_path = 'document-lda-visualization.html'

# Selected theme number

n_topics = 5

# The number of theme words of the first n_top_words of each theme to the output

n_top_words = 20

# Remove the regular expression of meaningless characters

pattern = u'[\\s\\d,.<>/?:;\'\"[\\]{}()\\|~!\t"@#$%^&*\\-_=+a-zA-Z,。\n《》、?:;“”‘’{}【】()…¥!—┄-]+'

url = 'https://raw.githubusercontents.com/Micro-sheep/Share/main/zhihu/answers.csv'

# Column in the CSV file to be split

document_column_name = 'Answer content'

pattern = u'[\\s\\d,.<>/?:;\'\"[\\]{}()\\|~!\t"@#$%^&*\\-_=+a-zA-Z,。\n《》、?:;“”‘’{}【】()…¥!—┄-]+'

def top_words_data_frame(model: LatentDirichletAllocation,

tf_idf_vectorizer: TfidfVectorizer,

n_top_words: int) -> pd.DataFrame:

'''

Seek the word n_top_words of each theme

Parameters

----------

Model: LatentDirichletallocation of Sklearn

TF_IDF_Vectorizer: TFIDFVectorizer of Sklearn

n_top_Words: Previous n_top_words a theme

Return

------

DataFrame: Including the distribution of theme words

'''

rows = []

feature_names = tf_idf_vectorizer.get_feature_names()

for topic in model.components_:

top_words = [feature_names[i]

for i in topic.argsort()[:-n_top_words - 1:-1]]

rows.append(top_words)

columns = [f'topic {i+1}' for i in range(n_top_words)]

df = pd.DataFrame(rows, columns=columns)

return df

def predict_to_data_frame(model: LatentDirichletAllocation, X: np.ndarray) -> pd.DataFrame:

'''

Find the probability distribution of document themes

Parameters

----------

Model: LatentDirichletallocation of Sklearn

X: Word directional matrix

Return

------

DataFrame: Including the distribution of theme words

'''

matrix = model.transform(X)

columns = [f'P(topic {i+1})' for i in range(len(model.components_))]

df = pd.DataFrame(matrix, columns=columns)

return df

if not os.path.exists(source_csv_path):

Print (F'Please open {url} with the browser and download the file (if there is no automatic download, you can press the keyboard shortcut ctrl s to start the download in the browser.

os.exit()

df = (

pd.read_csv(

source_csv_path,

encoding='utf-8-sig')

.drop_duplicates()

.dropna()

.rename(columns={

document_column_name: 'text'

}))

#

df['cut'] = (

df['text']

.apply(lambda x: str(x))

.apply(lambda x: re.sub(pattern, ' ', x))

.apply(lambda x: " ".join(jieba.lcut(x)))

)

# Tf-idf

tf_idf_vectorizer = TfidfVectorizer()

tf_idf = tf_idf_vectorizer.fit_transform(df['cut'])

lda = LatentDirichletAllocation(

n_components=n_topics,

max_iter=50,

learning_method='online',

learning_offset=50,

random_state=0)

# Use TF_IDF Craid Training LDA model

lda.fit(tf_idf)

# Calculation n_top_words theme

top_words_df = top_words_data_frame(lda, tf_idf_vectorizer, n_top_words)

# Save n_top_words topic words to CSV files

top_words_df.to_csv(top_words_csv_path, encoding='utf-8-sig', index=None)

# Turn tf_idf as an array so that it can be used to calculate the probability distribution of the text theme later

X = tf_idf.toarray()

#An calculation, the topic probability distribution situation

predict_df = predict_to_data_frame(lda, X)

# Save text theme probability distribution into CSV files

predict_df.to_csv(predict_topic_csv_path, encoding='utf-8-sig', index=None)Run the above code, you will get two new CSV files, they are probably like this

The above is to complete the theme of the Chinese documentation of LDA

At the beginning, we said that the goal of this article is to make the LDA theme extraction and visualization. Yesterday we have successfully achieved it. Below we will use the Pyldavis library to dynamically visualize the theme we have drawn (follow the previous one in front The dynamic effect displayed by Zhang GIF is the same)

There are a few lines of specific implementation code, I do n’t talk about how to write it in detail. I also read the document to operate. Below is the final code and annotation, including theme extraction and visualization

For the complete code of LDA theme extraction and visualization of the specified file

import pyLDAvis.sklearn

import pyLDAvis

import numpy as np

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.decomposition import LatentDirichletAllocation

import pandas as pd

import jieba

import re

import os

# Local CSV file can be a local file or a remote file

source_csv_path = 'answers.csv'

# The text of the text in the text CSV file, note that you must fill in it here, otherwise you will report an error!

document_column_name = 'Answer content'

# Output the file path of the topic

top_words_csv_path = 'top-topic-words.csv'

# Output file path of the theme of each documentation

predict_topic_csv_path = 'document-distribution.csv'

# M html file path

html_path = 'document-lda-visualization.html'

# Selected theme number

n_topics = 5

# The number of theme words of the first n_top_words of each theme to the output

n_top_words = 20

# Remove the regular expression of meaningless characters

pattern = u'[\\s\\d,.<>/?:;\'\"[\\]{}()\\|~!\t"@#$%^&*\\-_=+a-zA-Z,。\n《》、?:;“”‘’{}【】()…¥!—┄-]+'

url = 'https://raw.githubusercontents.com/Micro-sheep/Share/main/zhihu/answers.csv'

# Column in the CSV file to be split

document_column_name = 'Answer content'

pattern = u'[\\s\\d,.<>/?:;\'\"[\\]{}()\\|~!\t"@#$%^&*\\-_=+a-zA-Z,。\n《》、?:;“”‘’{}【】()…¥!—┄-]+'

def top_words_data_frame(model: LatentDirichletAllocation,

tf_idf_vectorizer: TfidfVectorizer,

n_top_words: int) -> pd.DataFrame:

'''

Seek the word n_top_words of each theme

Parameters

----------

Model: LatentDirichletallocation of Sklearn

TF_IDF_Vectorizer: TFIDFVectorizer of Sklearn

n_top_Words: Previous n_top_words a theme

Return

------

DataFrame: Including the distribution of theme words

'''

rows = []

feature_names = tf_idf_vectorizer.get_feature_names()

for topic in model.components_:

top_words = [feature_names[i]

for i in topic.argsort()[:-n_top_words - 1:-1]]

rows.append(top_words)

columns = [f'topic {i+1}' for i in range(n_top_words)]

df = pd.DataFrame(rows, columns=columns)

return df

def predict_to_data_frame(model: LatentDirichletAllocation, X: np.ndarray) -> pd.DataFrame:

'''

Find the probability distribution of document themes

Parameters

----------

Model: LatentDirichletallocation of Sklearn

X: Word directional matrix

Return

------

DataFrame: Including the distribution of theme words

'''

matrix = model.transform(X)

columns = [f'P(topic word {i+1})' for i in range(len(model.components_))]

df = pd.DataFrame(matrix, columns=columns)

return df

if not os.path.exists(source_csv_path):

Print (F'Please open {url} with the browser and download the file (if there is no automatic download, you can press the keyboard shortcut ctrl s to start the download in the browser.

os.exit()

df = (

pd.read_csv(

source_csv_path,

encoding='utf-8-sig')

.drop_duplicates()

.dropna()

.rename(columns={

document_column_name: 'text'

}))

#

df['cut'] = (

df['text']

.apply(lambda x: str(x))

.apply(lambda x: re.sub(pattern, ' ', x))

.apply(lambda x: " ".join(jieba.lcut(x)))

)

# Tf-idf

tf_idf_vectorizer = TfidfVectorizer()

tf_idf = tf_idf_vectorizer.fit_transform(df['cut'])

lda = LatentDirichletAllocation(

n_components=n_topics,

max_iter=50,

learning_method='online',

learning_offset=50,

random_state=0)

# Use TF_IDF Craid Training LDA model

lda.fit(tf_idf)

# Calculation n_top_words theme

top_words_df = top_words_data_frame(lda, tf_idf_vectorizer, n_top_words)

# Save n_top_words topic words to CSV files

top_words_df.to_csv(top_words_csv_path, encoding='utf-8-sig', index=None)

# Turn tf_idf as an array so that it can be used to calculate the probability distribution of the text theme later

X = tf_idf.toarray()

#An calculation, the topic probability distribution situation

predict_df = predict_to_data_frame(lda, X)

# Save text theme probability distribution into CSV files

predict_df.to_csv(predict_topic_csv_path, encoding='utf-8-sig', index=None)

# Use pyldavis for visualization

data = pyLDAvis.sklearn.prepare(lda, tf_idf, tf_idf_vectorizer)

pyLDAvis.save_html(data, html_path)

# #

os.system('clear')

# Browser open the html file to view the visualization results

os.system(f'start {html_path}')

Print ('This is generated this file:',

top_words_csv_path,

predict_topic_csv_path,

html_path)Of course, if you did not download the CSV file I mentioned earlier, the code above will end in advance, and you better turn to the CSV file I mentioned earlier.

After running the above code, 2 CSV files and 1 HTML file will be generated. Among them, HTML will automatically open with the browser by default. If it is not automatically opened, you need to open it with your browser to view the visual effect!

The following is the ultimate code of this article, and the most common code in the full text

General LDA theme extraction and visualization complete code

import pyLDAvis.sklearn

import pyLDAvis

import numpy as np

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.decomposition import LatentDirichletAllocation

import pandas as pd

import jieba

import re

import os

# To make LDA's text CSV file, it can be a local file or a remote file. It must be guaranteed that it exists! Intersection Intersection Intersection

source_csv_path = 'answers.csv'

# The text of the text in the text CSV file, note that you must fill in it here, otherwise you will report an error! Intersection Intersection

document_column_name = 'Answer content'

# Output the file path of the topic

top_words_csv_path = 'top-topic-words.csv'

# Output file path of the theme of each documentation

predict_topic_csv_path = 'document-distribution.csv'

# M html file path

html_path = 'document-lda-visualization.html'

# Selected theme number

n_topics = 5

# The number of theme words of the first n_top_words of each theme to the output

n_top_words = 20

# Remove the regular expression of meaningless characters

pattern = u'[\\s\\d,.<>/?:;\'\"[\\]{}()\\|~!\t"@#$%^&*\\-_=+a-zA-Z,。\n《》、?:;“”‘’{}【】()…¥!—┄-]+'

def top_words_data_frame(model: LatentDirichletAllocation,

tf_idf_vectorizer: TfidfVectorizer,

n_top_words: int) -> pd.DataFrame:

'''

Seek the word n_top_words of each theme

Parameters

----------

Model: LatentDirichletallocation of Sklearn

TF_IDF_Vectorizer: TFIDFVectorizer of Sklearn

n_top_Words: Previous n_top_words a theme

Return

------

DataFrame: Including the distribution of theme words

'''

rows = []

feature_names = tf_idf_vectorizer.get_feature_names()

for topic in model.components_:

top_words = [feature_names[i]

for i in topic.argsort()[:-n_top_words - 1:-1]]

rows.append(top_words)

columns = [f'topic word {i+1}' for i in range(n_top_words)]

df = pd.DataFrame(rows, columns=columns)

return df

def predict_to_data_frame(model: LatentDirichletAllocation, X: np.ndarray) -> pd.DataFrame:

'''

Find the probability distribution of document themes

Parameters

----------

Model: LatentDirichletallocation of Sklearn

X: Word directional matrix

Return

------

DataFrame: Including the distribution of theme words

'''

matrix = model.transform(X)

columns = [f'P(topic {i+1})' for i in range(len(model.components_))]

df = pd.DataFrame(matrix, columns=columns)

return df

df = (

pd.read_csv(

source_csv_path,

encoding='utf-8-sig')

.drop_duplicates()

.dropna()

.rename(columns={

document_column_name: 'text'

}))

# Set the gatling word collection

stop_words_set = set (['you', 'me'])

#

df['cut'] = (

df['text']

.apply(lambda x: str(x))

.apply(lambda x: re.sub(pattern, ' ', x))

.apply(lambda x: " ".join([word for word in jieba.lcut(x) if word not in stop_words_set]))

)

# Tf-idf

tf_idf_vectorizer = TfidfVectorizer()

tf_idf = tf_idf_vectorizer.fit_transform(df['cut'])

lda = LatentDirichletAllocation(

n_components=n_topics,

max_iter=50,

learning_method='online',

learning_offset=50,

random_state=0)

# Use TF_IDF Craid Training LDA model

lda.fit(tf_idf)

# Calculation n_top_words theme

top_words_df = top_words_data_frame(lda, tf_idf_vectorizer, n_top_words)

# Save n_top_words topic words to CSV files

top_words_df.to_csv(top_words_csv_path, encoding='utf-8-sig', index=None)

# Turn tf_idf as an array so that it can be used to calculate the probability distribution of the text theme later

X = tf_idf.toarray()

#An calculation, the topic probability distribution situation

predict_df = predict_to_data_frame(lda, X)

# Save text theme probability distribution into CSV files

predict_df.to_csv(predict_topic_csv_path, encoding='utf-8-sig', index=None)

# Use pyldavis for visualization

data = pyLDAvis.sklearn.prepare(lda, tf_idf, tf_idf_vectorizer)

pyLDAvis.save_html(data, html_path)

# #

os.system('clear')

# Browser open the html file to view the visualization results

os.system(f'start {html_path}')

Print ('This is generated this file:',

top_words_csv_path,

predict_topic_csv_path,

html_path)

If you want to use other files for LDA theme extraction and visualization, you need to pay attention to the above code

# Text CSV File, it can be a local file or a remote file

source_csv_path = 'answers.csv'

# The text of the text in the text CSV file, note that you must fill in it here, otherwise you will report an error!

document_column_name = 'Answer content'

# Output the file path of the topic

top_words_csv_path = 'top-topic-words.csv'

# Output file path of the theme of each documentation

predict_topic_csv_path = 'document-distribution.csv'

# M html file path

html_path = 'document-lda-visualization.html'

# Selected theme number

n_topics = 5

# The number of theme words of the first n_top_words of each theme to the output

n_top_words = 20Just modify it according to the comment!

If the file you use is not a .csv file, but .xlsx or .xls files, then put in the code

df = (

pd.read_csv(

source_csv_path,

encoding='utf-8-sig')

.drop_duplicates()

.dropna()

.rename(columns={

document_column_name: 'text'

}))Change to

df = (

pd.read_excel(

".xlsx or .xlsx file path"))

.drop_duplicates()

.dropna()

.rename(columns={

document_column_name: 'text'

}))For example (Windows system)

df = (

pd.read_excel(

"C:/Users/me/data/data.xlsx")

.drop_duplicates()

.dropna()

.rename(columns={

document_column_name: 'text'

}))For example (Linux system)

df = (

pd.read_excel(

"/home/me/data/data.xlsx")

.drop_duplicates()

.dropna()

.rename(columns={

document_column_name: 'text'

}))At the same time, remember to use PIP to install XLRD and OpenPyxl.

At the end

This article shows you in detail how to do the LDA theme extraction and visualization. If you read it carefully, the harvest will be relatively large. Finally, I wish you all progress every day! Intersection If you encounter difficulties at the beginning of learning, you want to find a Python to learn the exchange environment, you can join us, receive learning materials, and discuss together

Intelligent Recommendation

Can't Python data visualization? Teach you to use Matplotlib to achieve data visualization (treasure version)

introduce In the process of using machine learning methods to solve problems, you will definitely encounter scenes that need to be drawn for data. Matplotlib is an open source drawing gallery that sup...

NLP basics (Excel reading and writing, keyword extraction, theme model LDA)

NLP basic knowledge of Excel reading and writing, keyword extraction, theme model LDA First, write Excel with openpyxl method Second, keyword extraction Third, LDA Fourth, pattern matching This articl...

NLP theme extraction Topic LDA code practice GENSIM package code

NLP theme extraction Topic LDA code practice GENSIM package code Original work, please indicate the source: [Mr.Scofield ] From RxNLP. Share a code practice: Practice a typical task ...

[LDA] LDA theme model

LDA is a probability generation model. It is believed that the document is generated by the words in the bag of words with a certain probability. For each document in the corpus, the generation proces...

Python data analysis: teach you how to use Pandas to generate visualization charts

As everyone knows, Matplotlib is the originator of many Python visualization packages and the most commonly used standard visualization library for Python. Its functions are very powerful and complex,...

More Recommendation

[Python | Miscellaneous Code] Teach you to use pyecharts to realize data visualization analysis

1.Module installation and introduction ###installation pip install pyecharts ###Introduction pyecharts is a class library for generating Echarts charts. Echarts is a data visualization JS library open...

LDA theme model English complete python code

reference...

Introduction to LDA theme model and Python implementation

1. Introduction to LDA theme model The LDA theme model is mainly used to speculate the theme distribution of the document. It can concentrate the theme of each document in the form of probability dist...

Jieba word and LDA subject extraction (Python)

First, environmental configuration Before running the word, you must install Python correctly. I am installing Python3.9, but it is recommended to install a low version, such as Python3.8, because som...

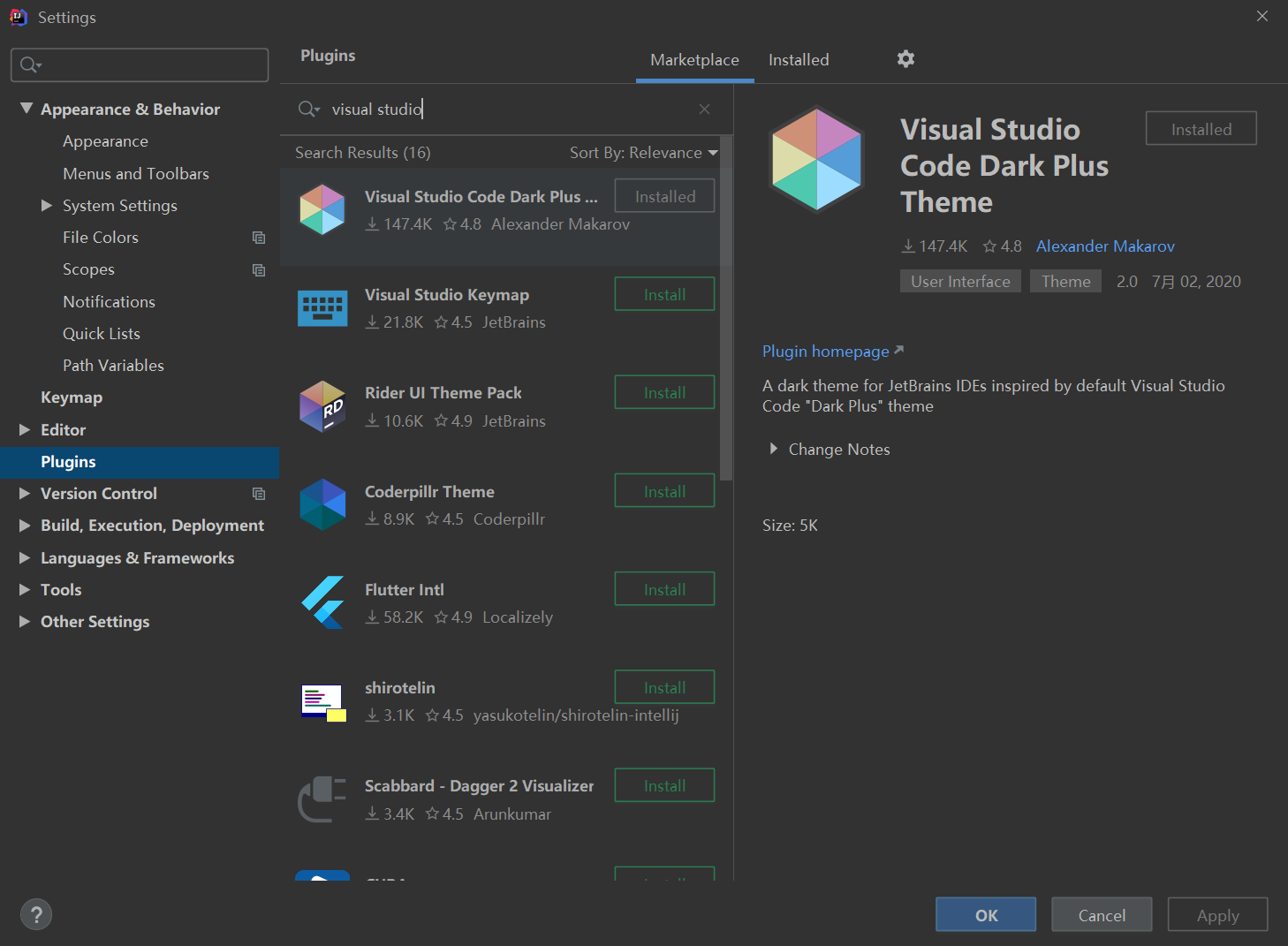

Teach you how to use vscode's theme skin + shortcut keys in idea

Friends who often use vscode as a development tool will feel very uncomfortable when using idea for the first time, because the skins and shortcut keys of the two tools are too different. Today I will...