8 Service fault tolerance-Resilience4j

tags: Spring Cloud java

Service fault tolerance and avalanche effect

Summary of existing architecture

Consul->Service discovery and configuration management

Ribbon->Load balancing

Feign->Code elegance

Chapter Goals

High concurrency -> Service fault tolerance

Avalanche effect

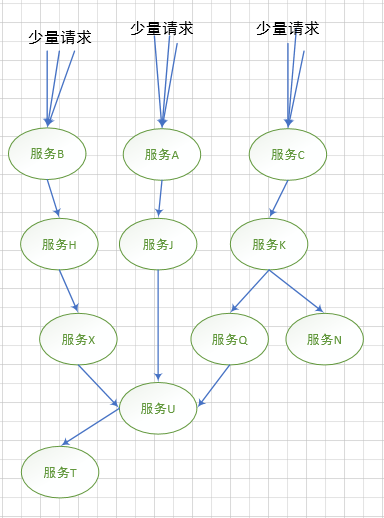

For example, consider three microservices in a call chain C -> B -> A. Suppose microservice A crashes under high concurrency. Requests from microservice B to A then have to wait. In Java, each request is handled by a thread, so a waiting call blocks its thread, and the thread is not released until the call times out. Under high concurrency, microservice B keeps creating new threads until its resources are exhausted, and B eventually crashes as well. Microservice C then crashes in the same way, bringing down every service in the call chain.

We call this phenomenon, in which the failure of a basic service cascades through its callers, the avalanche effect.

It is also known as a "cascading failure".

Five solutions for service fault tolerance

-

Timeout -> assign a longest time to each request. If the time is exceeded, release the thread

-

Current limit -> Only high concurrency will block a large number of threads. In the case of a large number of stress tests, setting the maximum number of threads can also prevent excessive threads from causing resource exhaustion

-

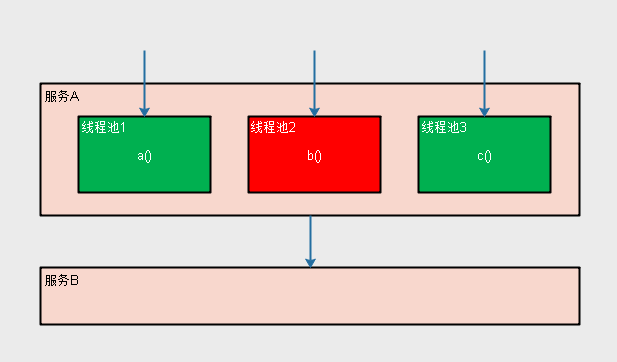

Silo mode -> Titanic The cabin is separated from the cabin by steel plate welding. Therefore, the water in one cabin will not cause the sinking of the ship. The silo mode in the software uses an independent thread pool for each service and does not affect each other.

-

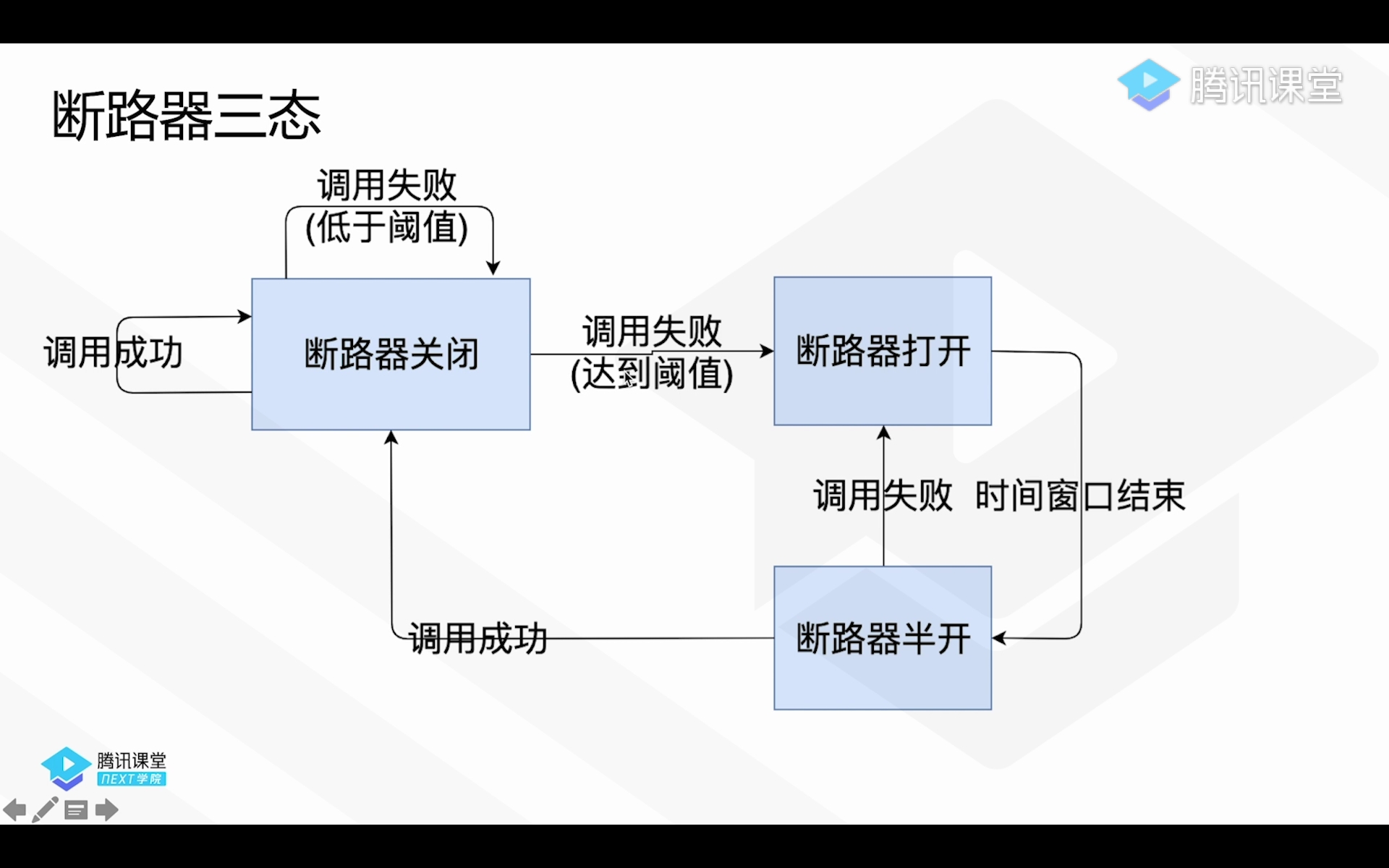

Circuit breaker mode -> When a service call error rate reaches 50%, the number of errors is 20, then the circuit breaker mode is activated

close half open open slide window

-

Retry -> Not designed to protect yourself, but designed for fault tolerance

Mainly thinking

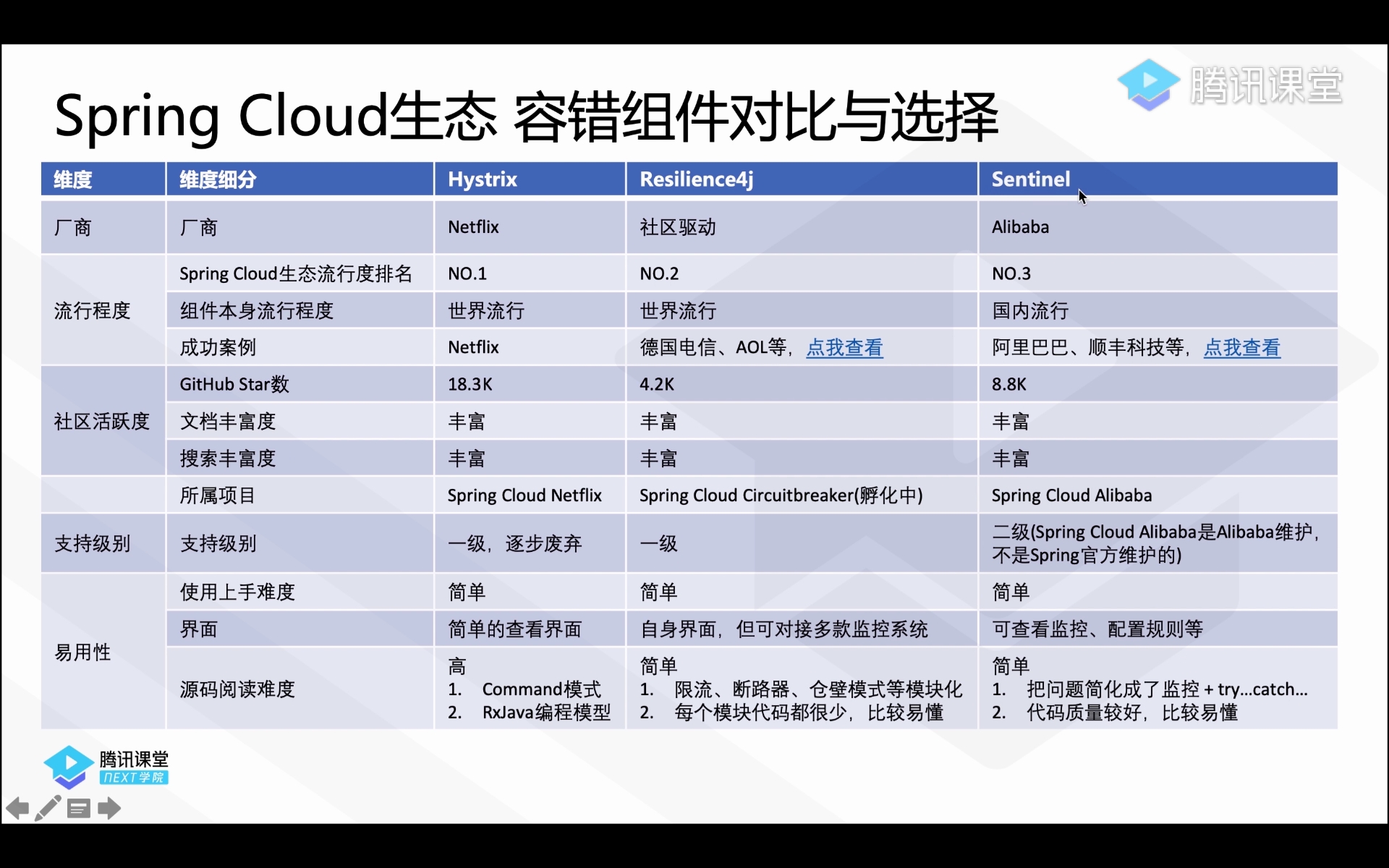
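The timeout idea from the list above can be sketched in plain Java. This is only an illustration under stated assumptions: `TimeoutCall` and `callWithTimeout` are hypothetical names, not part of Resilience4j, and the sketch simply bounds how long the caller waits for a result before giving up and using a fallback value.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

// Hypothetical helper (not Resilience4j): bounds how long a caller waits for a call.
class TimeoutCall {

    // Runs the task on the pool and returns the fallback if no result arrives in time.
    static <T> T callWithTimeout(ExecutorService pool, Callable<T> task, long timeoutMillis, T fallback) {
        Future<T> future = pool.submit(task);
        try {
            return future.get(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (TimeoutException e) {
            future.cancel(true); // interrupt the worker so that its thread is released
            return fallback;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            future.cancel(true);
            return fallback;
        } catch (ExecutionException e) {
            return fallback; // the call itself failed
        }
    }

    public static void main(String[] args) {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        String fast = callWithTimeout(pool, () -> "ok", 500, "fallback");
        String slow = callWithTimeout(pool, () -> { Thread.sleep(2_000); return "late"; }, 100, "fallback");
        System.out.println(fast + " / " + slow);
        pool.shutdownNow();
    }
}
```

The slow call is abandoned after 100 ms instead of holding the caller for two seconds, which is the property that keeps blocked threads from piling up.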

Comparison and selection of fault-tolerance components in the Spring Cloud ecosystem

Using Resilience4j for fault tolerance: rate limiting

Resilience4J is a lightweight fault-tolerant framework inspired by Hystrix from Netflix

https://resilience4j.readme.io/

https://github.com/resilience4j/resilience4j

Official example:

https://github.com/resilience4j/resilience4j-spring-cloud2-demo

- Add the dependency to the pom of the course microservice

<dependency>
    <groupId>io.github.resilience4j</groupId>
    <artifactId>resilience4j-spring-cloud2</artifactId>
    <version>1.1.0</version>
</dependency>

- Add the @RateLimiter annotation, for example @RateLimiter(name = "lessonController")

The annotation can be placed on the class or on a method; here it is placed on the method.

package com.cloud.msclass.controller;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.PathVariable;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

import com.cloud.msclass.domain.entity.Lesson;
import com.cloud.msclass.service.LessonService;

import io.github.resilience4j.ratelimiter.annotation.RateLimiter;

@RestController
@RequestMapping("lesssons")
public class LessonController {

    @Autowired
    private LessonService lessonService;

    /**
     * http://localhost:8010/lesssons/buy/1
     * Purchase the course with the specified id.
     *
     * @param id id of the course
     */
    @GetMapping("/buy/{id}")
    @RateLimiter(name = "buyById")
    public Lesson buyById(@PathVariable Integer id) {
        return this.lessonService.buyById(id);
    }
}

- Add configuration

resilience4j:
  ratelimiter:
    instances:
      # Must match the name in the annotation, otherwise it has no effect
      buyById:
        # Maximum number of requests allowed per refresh period
        limit-for-period: 1
        # Refresh period
        limit-refresh-period: 1s
        # How long a thread waits for permission; with 0 the thread does not wait and an exception is thrown immediately
        timeout-duration: 0

With the above configuration, http://localhost:8010/lesssons/buy/1 can be requested at most once per second.

The startup log contains the following information

2020-02-24 16:22:53.204 INFO 16700 --- [ main] i.g.r.utils.RxJava2OnClasspathCondition : RxJava2 related Aspect extensions are not activated, because RxJava2 is not on the classpath.

2020-02-24 16:22:53.206 INFO 16700 --- [ main] i.g.r.utils.ReactorOnClasspathCondition : Reactor related Aspect extensions are not activated because Resilience4j Reactor module is not on the classpath.

These messages go away if the following modules are added, but leaving them out does not affect usage.

<dependency>
    <groupId>io.github.resilience4j</groupId>
    <artifactId>resilience4j-rxjava2</artifactId>
    <version>1.2.0</version>
</dependency>

<dependency>
    <groupId>io.reactivex.rxjava2</groupId>
    <artifactId>rxjava</artifactId>
</dependency>

<dependency>
    <groupId>io.github.resilience4j</groupId>
    <artifactId>resilience4j-reactor</artifactId>
</dependency>

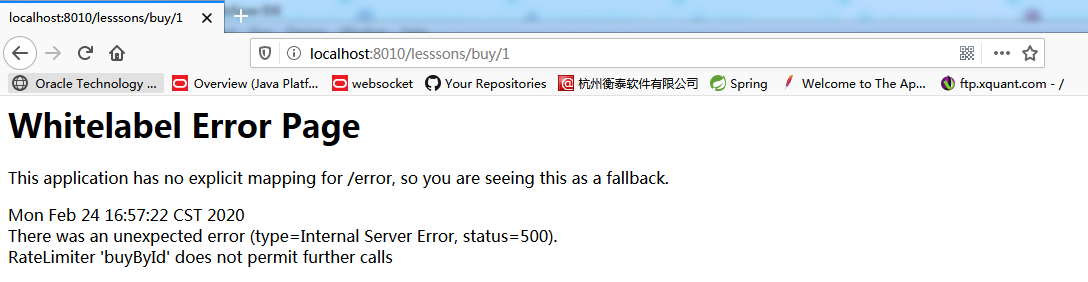

Refreshing the page quickly produces the following error:

io.github.resilience4j.ratelimiter.RequestNotPermitted: RateLimiter 'buyById' does not permit further calls

To avoid showing such an error page when the rate limit is triggered, and to apply some other strategy instead, set the fallbackMethod attribute of the @RateLimiter annotation:

package com.cloud.msclass.controller;

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.PathVariable;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

import com.cloud.msclass.domain.entity.Lesson;
import com.cloud.msclass.service.LessonService;

import io.github.resilience4j.ratelimiter.annotation.RateLimiter;

@RestController
@RequestMapping("lesssons")
public class LessonController {

    private static final Logger logger = LoggerFactory.getLogger(LessonController.class);

    @Autowired
    private LessonService lessonService;

    /**
     * http://localhost:8010/lesssons/buy/1
     * Purchase the course with the specified id.
     *
     * @param id id of the course
     */
    @GetMapping("/buy/{id}")
    @RateLimiter(name = "buyById", fallbackMethod = "buyByIdFallBack")
    public Lesson buyById(@PathVariable Integer id) {
        return this.lessonService.buyById(id);
    }

    // Must have the same return type and parameters as the original method
    // (it may additionally take a Throwable as the last parameter)
    public Lesson buyByIdFallBack(Integer id, Throwable throwable) {
        // For example, a value from a local cache could be returned here
        logger.error("fallback", throwable);
        return new Lesson();
    }
}

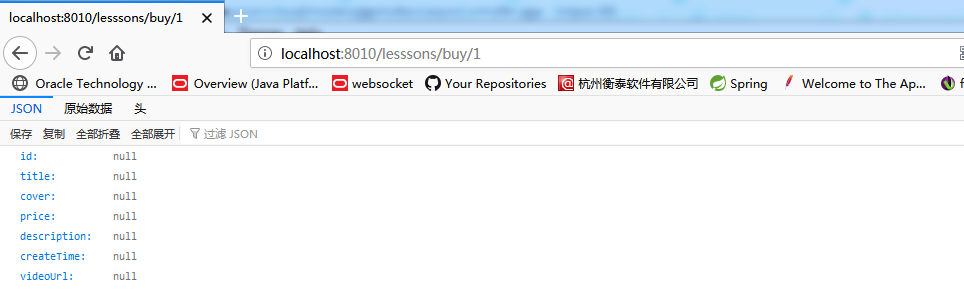

If the current limit is triggered at this time, the following result is returned:

Resilience4j rate limiter implementation

- Leaky bucket algorithm

- Token bucket algorithm

io.github.resilience4j.ratelimiter.internal.AtomicRateLimiter is the default implementation and is based on the token bucket algorithm.

io.github.resilience4j.ratelimiter.internal.SemaphoreBasedRateLimiter is based on the Semaphore class.
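To make the token bucket idea concrete, here is a minimal sketch in plain Java. It is not the code of AtomicRateLimiter; `TokenBucket` and its parameters are hypothetical names chosen for illustration, and the clock is injected so that the behaviour can be shown without sleeping. The values mirror the configuration used earlier (one permit per second, no waiting).

```java
import java.util.function.LongSupplier;

// Illustrative token bucket, not Resilience4j's AtomicRateLimiter.
class TokenBucket {
    private final long capacity;          // maximum number of stored tokens
    private final long refillPeriodNanos; // time needed to add one token
    private final LongSupplier clock;     // nanosecond time source, injectable for tests
    private long tokens;
    private long lastRefillNanos;

    TokenBucket(long capacity, long refillPeriodNanos, LongSupplier clock) {
        this.capacity = capacity;
        this.refillPeriodNanos = refillPeriodNanos;
        this.clock = clock;
        this.tokens = capacity;
        this.lastRefillNanos = clock.getAsLong();
    }

    // Takes one token if available; never blocks (comparable to timeout-duration: 0).
    synchronized boolean tryAcquire() {
        refill();
        if (tokens == 0) {
            return false;
        }
        tokens--;
        return true;
    }

    // Adds the tokens that have accumulated since the last refill, capped at capacity.
    private void refill() {
        long now = clock.getAsLong();
        long newTokens = (now - lastRefillNanos) / refillPeriodNanos;
        if (newTokens > 0) {
            tokens = Math.min(capacity, tokens + newTokens);
            lastRefillNanos += newTokens * refillPeriodNanos;
        }
    }

    public static void main(String[] args) {
        long[] now = {0L}; // fake clock so that the demo does not need to sleep
        TokenBucket bucket = new TokenBucket(1, 1_000_000_000L, () -> now[0]);
        System.out.println(bucket.tryAcquire()); // first call in the period
        System.out.println(bucket.tryAcquire()); // second call in the same period
        now[0] += 1_000_000_000L;                // one second later
        System.out.println(bucket.tryAcquire());
    }
}
```

The second call within the same second is refused, and a permit becomes available again once the refresh period has passed.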

Using Resilience4j for fault tolerance: bulkhead pattern

@GetMapping("/buy/{id}")
// @RateLimiter(name = "buyById", fallbackMethod = "buyByIdFallBack")
@Bulkhead(name = "buyById", fallbackMethod = "buyByIdFallBack")
public Lesson buyById(@PathVariable Integer id) {
    return this.lessonService.buyById(id);
}

resilience4j:
  ratelimiter:
    instances:
      buyById:
        # Maximum number of requests allowed per refresh period
        limit-for-period: 1
        # Refresh period
        limit-refresh-period: 1s
        # How long a thread waits for permission; with 0 the thread does not wait and an exception is thrown immediately
        timeout-duration: 0
  bulkhead:
    instances:
      buyById:
        # Maximum number of concurrent calls
        max-concurrent-calls: 3
        # Maximum time to wait when the bulkhead is saturated (default 0)
        # max-wait-duration: 10ms
        # Event buffer size
        # event-consumer-buffer-size: 1

There are two implementations:

Semaphore: each request tries to acquire a permit from the semaphore. If none is available, the request is rejected.

ThreadPool: each request tries to obtain a thread from the pool. If none is available, the request enters the waiting queue. Once the queue is full, the rejection policy is applied.

From a performance perspective, the Semaphore-based implementation is better than the thread-pool-based one. With thread pools, a microservice may end up with many small isolated pools and therefore too many threads overall, and too many threads cause excessive context switching.
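The semaphore variant can be sketched in a few lines of plain Java. This is an illustration only, not the code of SemaphoreBulkhead; `SemaphoreBulkheadSketch` and `execute` are hypothetical names. The point it shows is that the call runs on the caller's own thread and is rejected immediately when no permit is free.

```java
import java.util.concurrent.Semaphore;
import java.util.function.Supplier;

// Illustrative semaphore bulkhead, not Resilience4j's SemaphoreBulkhead.
class SemaphoreBulkheadSketch {
    private final Semaphore permits;

    SemaphoreBulkheadSketch(int maxConcurrentCalls) {
        this.permits = new Semaphore(maxConcurrentCalls);
    }

    // Runs the call on the caller's thread if a permit is free, otherwise returns the fallback.
    <T> T execute(Supplier<T> call, Supplier<T> fallback) {
        if (!permits.tryAcquire()) {
            return fallback.get(); // bulkhead is full: reject immediately
        }
        try {
            return call.get();
        } finally {
            permits.release();
        }
    }

    public static void main(String[] args) {
        SemaphoreBulkheadSketch bulkhead = new SemaphoreBulkheadSketch(1);
        // The nested call runs while the outer call still holds the only permit.
        String result = bulkhead.execute(
                () -> "outer+" + bulkhead.execute(() -> "inner", () -> "rejected"),
                () -> "rejected");
        System.out.println(result);
    }
}
```

No extra threads are created here, which is why this variant avoids the context-switching cost described above.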

The default implementation is Semaphore-based. To use the ThreadPool-based mode, configure it as follows:

@Bulkhead(name = "buyById", fallbackMethod = "buyByIdFallBack", type = Type.THREADPOOL)

resilience4j:
  thread-pool-bulkhead:
    instances:
      buyById:
        # Maximum thread pool size
        max-thread-pool-size: 1
        # Core thread pool size
        core-thread-pool-size: 1
        # Queue capacity (default 100)
        queue-capacity: 1
        # When the number of threads exceeds the core size, the maximum time that excess idle threads wait for new tasks before terminating (default 20ms)
        keep-alive-duration: 20ms
        # Event buffer size
        event-consumer-buffer-size: 100

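Note: the thread pool bulkhead only works for methods that return a CompletableFuture. Otherwise the following exception is thrown:

java.lang.IllegalStateException: ThreadPool bulkhead is only applicable for completable futures

Implementation classes:

io.github.resilience4j.bulkhead.internal.SemaphoreBulkhead

io.github.resilience4j.bulkhead.internal.FixedThreadPoolBulkhead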
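The following plain-Java sketch suggests why a thread pool bulkhead naturally hands back a future: the call runs on a pool thread, not on the caller's thread. It is not the code of FixedThreadPoolBulkhead; `ThreadPoolBulkheadSketch` and `submit` are hypothetical names, and the sizes (one thread, queue capacity one) mirror the configuration above.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

// Illustrative thread-pool bulkhead, not Resilience4j's FixedThreadPoolBulkhead.
class ThreadPoolBulkheadSketch {
    private final ThreadPoolExecutor pool;

    ThreadPoolBulkheadSketch(int threads, int queueCapacity) {
        // Fixed-size pool with a bounded queue; the default AbortPolicy rejects when both are full.
        this.pool = new ThreadPoolExecutor(threads, threads, 20, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<>(queueCapacity));
    }

    // The call runs on a pool thread, so the caller receives a future instead of the value.
    <T> CompletableFuture<T> submit(Supplier<T> call) {
        try {
            return CompletableFuture.supplyAsync(call, pool);
        } catch (RejectedExecutionException e) {
            CompletableFuture<T> rejected = new CompletableFuture<>();
            rejected.completeExceptionally(e);
            return rejected;
        }
    }

    void shutdown() {
        pool.shutdownNow();
    }

    public static void main(String[] args) {
        ThreadPoolBulkheadSketch bulkhead = new ThreadPoolBulkheadSketch(1, 1);
        CountDownLatch release = new CountDownLatch(1);
        Supplier<String> slowCall = () -> {
            try {
                release.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            return "done";
        };
        CompletableFuture<String> running = bulkhead.submit(slowCall);  // occupies the single thread
        CompletableFuture<String> queued = bulkhead.submit(slowCall);   // waits in the queue
        CompletableFuture<String> rejected = bulkhead.submit(slowCall); // pool and queue are full
        System.out.println(rejected.isCompletedExceptionally());
        release.countDown();
        System.out.println(running.join() + " " + queued.join());
        bulkhead.shutdown();
    }
}
```

The third call is rejected because the single thread is busy and the queue already holds one task, matching the "queue full, then reject" behaviour described for the ThreadPool mode.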

Using Resilience4j for fault tolerance: circuit breaker pattern

/**
 * http://localhost:8010/lesssons/buy/1
 * Purchase the course with the specified id.
 *
 * @param id id of the course
 */
@GetMapping("/buy/{id}")
// @RateLimiter(name = "buyById", fallbackMethod = "buyByIdFallBack")
// @Bulkhead(name = "buyById", fallbackMethod = "buyByIdFallBack")
@CircuitBreaker(name = "buyById", fallbackMethod = "buyByIdFallBack")
public Lesson buyById(@PathVariable Integer id) {
    return this.lessonService.buyById(id);
}

The implementation, io.github.resilience4j.circuitbreaker.internal.CircuitBreakerStateMachine, is based on a finite state machine.
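A finite state machine of this kind can be sketched as follows. This is a simplified illustration, not the code of CircuitBreakerStateMachine: `CircuitBreakerSketch` and its parameters are hypothetical, it uses a count-based window of recent calls, it allows a single trial call in the half-open state, and it is not thread-safe.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.function.LongSupplier;
import java.util.function.Supplier;

// Illustrative circuit breaker (not thread-safe), not Resilience4j's CircuitBreakerStateMachine.
class CircuitBreakerSketch {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int windowSize;          // number of recent calls that are evaluated
    private final double failureRateLimit; // e.g. 0.5 for 50%
    private final long openMillis;         // how long the breaker stays open
    private final LongSupplier clock;      // millisecond time source, injectable for tests
    private final Deque<Boolean> window = new ArrayDeque<>(); // true = failed call
    private State state = State.CLOSED;
    private long openedAt;

    CircuitBreakerSketch(int windowSize, double failureRateLimit, long openMillis, LongSupplier clock) {
        this.windowSize = windowSize;
        this.failureRateLimit = failureRateLimit;
        this.openMillis = openMillis;
        this.clock = clock;
    }

    // Returns the current state, moving from OPEN to HALF_OPEN once the wait time has passed.
    State state() {
        if (state == State.OPEN && clock.getAsLong() - openedAt >= openMillis) {
            state = State.HALF_OPEN;
        }
        return state;
    }

    <T> T call(Supplier<T> action, Supplier<T> fallback) {
        if (state() == State.OPEN) {
            return fallback.get(); // fail fast without calling the service
        }
        try {
            T result = action.get();
            record(false);
            return result;
        } catch (RuntimeException e) {
            record(true);
            return fallback.get();
        }
    }

    private void record(boolean failed) {
        if (state == State.HALF_OPEN) {
            // The trial call decides: success closes the breaker, failure reopens it.
            window.clear();
            if (failed) {
                open();
            } else {
                state = State.CLOSED;
            }
            return;
        }
        window.addLast(failed);
        if (window.size() > windowSize) {
            window.removeFirst(); // slide the window
        }
        long failures = window.stream().filter(f -> f).count();
        if (window.size() == windowSize && (double) failures / windowSize >= failureRateLimit) {
            open();
        }
    }

    private void open() {
        state = State.OPEN;
        openedAt = clock.getAsLong();
    }

    public static void main(String[] args) {
        long[] now = {0L}; // fake clock in milliseconds
        CircuitBreakerSketch breaker = new CircuitBreakerSketch(4, 0.5, 1_000, () -> now[0]);
        Supplier<String> failing = () -> { throw new IllegalStateException("service down"); };
        for (int i = 0; i < 4; i++) {
            breaker.call(failing, () -> "fallback");
        }
        System.out.println(breaker.state());
        now[0] += 1_000;
        System.out.println(breaker.state());
        System.out.println(breaker.call(() -> "ok", () -> "fallback"));
        System.out.println(breaker.state());
    }
}
```

While the breaker is open, the protected service is not called at all, which gives a failing downstream service time to recover.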

Using Resilience4j for fault tolerance: retry

@Retry(name = "buyById", fallbackMethod = "buyByIdFallBack")

Implementation class: io.github.resilience4j.retry.internal.RetryImpl
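The basic retry loop can be sketched in plain Java as follows. This is not the code of RetryImpl; `RetrySketch`, `retry`, and the fixed wait between attempts are assumptions made for the illustration.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

// Illustrative retry helper, not Resilience4j's RetryImpl.
class RetrySketch {

    // Calls the action up to maxAttempts times, waiting waitMillis between attempts.
    static <T> T retry(int maxAttempts, long waitMillis, Supplier<T> action) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        RuntimeException last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return action.get();
            } catch (RuntimeException e) {
                last = e; // remember the failure and try again
            }
            if (attempt < maxAttempts) {
                try {
                    Thread.sleep(waitMillis);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    break; // stop retrying when interrupted
                }
            }
        }
        throw last; // every attempt failed: surface the last error
    }

    public static void main(String[] args) {
        AtomicInteger calls = new AtomicInteger();
        String result = retry(3, 10, () -> {
            if (calls.incrementAndGet() < 3) {
                throw new IllegalStateException("temporary failure");
            }
            return "ok after " + calls.get() + " attempts";
        });
        System.out.println(result);
    }
}
```

Retrying helps with transient failures, but it also multiplies the load on a struggling service, which is one reason it is usually combined with the other mechanisms rather than used alone.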

Resilience4j configuration management

- Viewing the configuration

package com.cloud.msclass.controller;

import java.util.List;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.cloud.client.ServiceInstance;
import org.springframework.cloud.client.discovery.DiscoveryClient;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;

import io.github.resilience4j.ratelimiter.RateLimiter;
import io.github.resilience4j.ratelimiter.RateLimiterRegistry;
import io.vavr.collection.Seq;

@RestController
public class TestController {

    @Autowired
    private DiscoveryClient discoveryClient;

    @GetMapping("/test-discovery")
    public List<ServiceInstance> testDiscovery() {
        // Query Consul for all instances of the specified microservice
        return discoveryClient.getInstances("ms-user");
    }

    @Autowired
    private RateLimiterRegistry rateLimiterRegistry;

    /**
     * http://localhost:8010/rate-limiter-configs
     */
    @GetMapping("/rate-limiter-configs")
    public Seq<RateLimiter> testRateLimiter() {
        return this.rateLimiterRegistry.getAllRateLimiters();
    }
}

Request http://localhost:8010/rate-limiter-configs. The response looks like this:

{"empty":false,"traversableAgain":true,"lazy":false,"async":false,"sequential":true,"ordered":false,"singleValued":false,"distinct":false,"orNull":{"name":"buyById","tags":{"empty":true,"lazy":false,"async":false,"traversableAgain":true,"distinct":true,"ordered":false,"singleValued":false,"sequential":false,"orNull":null,"memoized":false},"eventPublisher":{},"rateLimiterConfig":{"timeoutDuration":0.0,"limitRefreshPeriod":1.000000000,"limitForPeriod":1,"writableStackTraceEnabled":true},"metrics":{"numberOfWaitingThreads":0,"availablePermissions":1,"nanosToWait":0,"cycle":684},"detailedMetrics":{"numberOfWaitingThreads":0,"availablePermissions":1,"nanosToWait":0,"cycle":684}},"memoized":false}

- Default configuration

Default settings can be added with the following configuration.

Note: for RateLimiter, the configuration named default takes effect if and only if the specified RateLimiter has no custom configuration of its own.

resilience4j:
  ratelimiter:
    configs:
      default:
        # Maximum number of requests allowed per refresh period
        limit-for-period: 1
        # Refresh period
        limit-refresh-period: 1s
        # How long a thread waits for permission; with 0 the thread does not wait and an exception is thrown immediately
        timeout-duration: 0

- Configuration refresh

The configuration can be stored in Consul and managed there.

Combining annotations and execution order

In real projects, the four fault-tolerance mechanisms above may be combined. If several annotations are used together, in what order do they take effect?

The io.github.resilience4j.ratelimiter.configure.RateLimiterAspect class implements the Ordered interface. The relevant code is:

@Override
public int getOrder() {
    return properties.getRateLimiterAspectOrder();
}

// Default value in the rate limiter properties
private int rateLimiterAspectOrder = Ordered.LOWEST_PRECEDENCE - 1;

// Constant defined in org.springframework.core.Ordered
int LOWEST_PRECEDENCE = Integer.MAX_VALUE;

The order of the other annotations can be determined in the same way:

Bulkhead(LOWEST_PRECEDENCE)

RateLimiter(LOWEST_PRECEDENCE - 1)

CircuitBreaker(LOWEST_PRECEDENCE - 2)

Retry(LOWEST_PRECEDENCE - 3)

If this order does not suit you, it can be customized (Bulkhead does not support a custom order):

resilience4j:
  retry:
    retry-aspect-order: 1
  circuitbreaker:
    circuit-breaker-aspect-order: 2
  ratelimiter:
    rate-limiter-aspect-order: 3
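In Spring, a smaller order value means higher precedence, and the aspect with the highest precedence wraps all the others. The following plain-Java model illustrates what that implies for the default values listed above. It is only a sketch: `AspectOrderSketch`, `Aspect`, and `wrap` are hypothetical names, and the wrappers merely print their own name instead of applying any fault-tolerance logic.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;
import java.util.function.Supplier;

// Illustrative model of aspect ordering; it does not use Spring or Resilience4j.
class AspectOrderSketch {

    // A named wrapper with an order value, loosely modelled on Spring's Ordered.
    static final class Aspect {
        final String name;
        final int order;

        Aspect(String name, int order) {
            this.name = name;
            this.order = order;
        }
    }

    // Wraps the target so that the aspect with the smallest order value ends up outermost.
    static Supplier<String> wrap(List<Aspect> aspects, Supplier<String> target) {
        List<Aspect> sorted = new ArrayList<>(aspects);
        sorted.sort(Comparator.comparingInt((Aspect a) -> a.order).reversed());
        Supplier<String> current = target;
        for (Aspect aspect : sorted) { // innermost (largest order value) first
            Supplier<String> inner = current;
            current = () -> aspect.name + "(" + inner.get() + ")";
        }
        return current;
    }

    public static void main(String[] args) {
        int lowest = Integer.MAX_VALUE; // value of Ordered.LOWEST_PRECEDENCE
        List<Aspect> defaults = Arrays.asList(
                new Aspect("Bulkhead", lowest),
                new Aspect("RateLimiter", lowest - 1),
                new Aspect("CircuitBreaker", lowest - 2),
                new Aspect("Retry", lowest - 3));
        System.out.println(wrap(defaults, () -> "buyById").get());
    }
}
```

With the default values, Retry is the outermost wrapper and Bulkhead the innermost, so a retried call passes through the circuit breaker, rate limiter, and bulkhead again on every attempt.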

Using Feign with Resilience4j

Add the Resilience4j annotations to the corresponding Feign client interface.

Summary of this chapter

- Common ideas of service fault tolerance

- Common usage patterns and related strategies

- Monitoring

Intelligent Recommendation

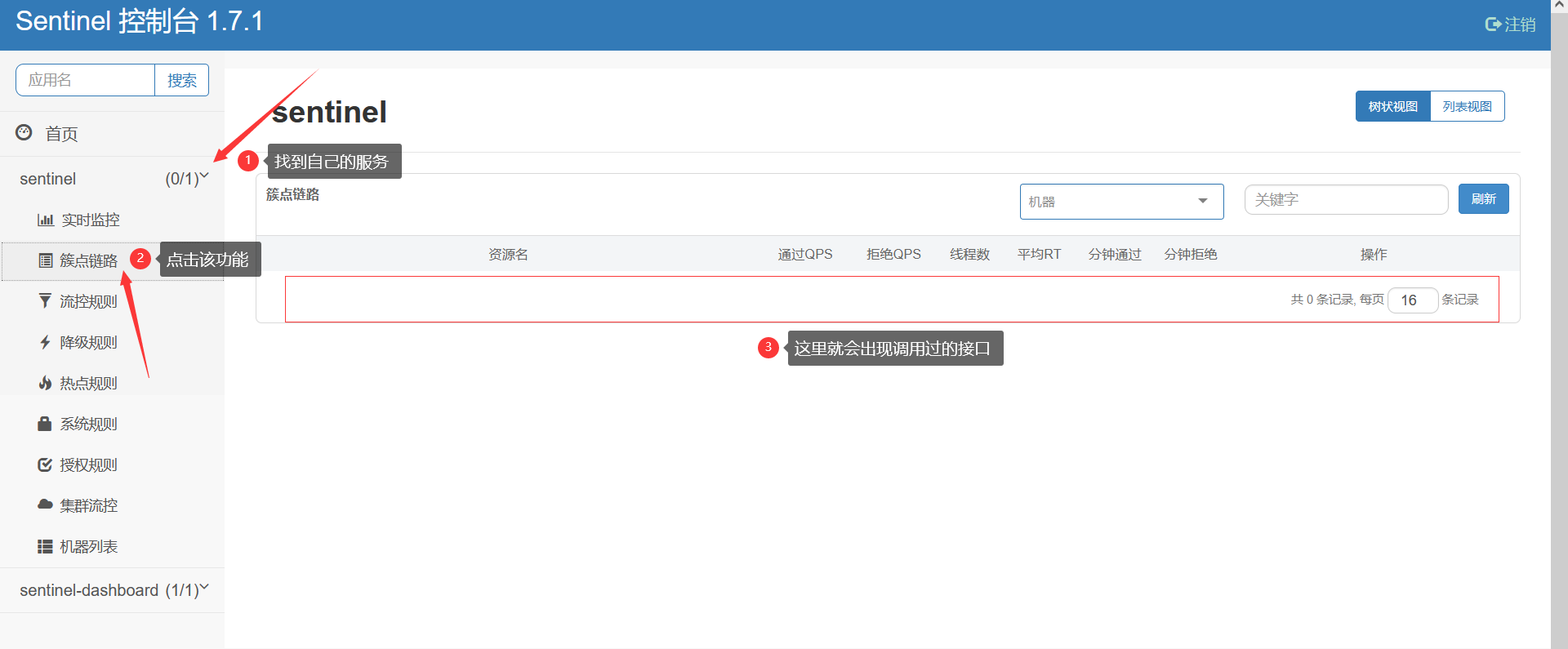

SENTINEL of the service fault tolerance

Background of service fault tolerance In distributed systems, each service is independent. They communicate through the network protocol between them, but in this architecture, the service access over...

Service Fault Tolerance Overview

background: In a distributed system, due to the increase in the number of services, network reasons, high concurrent dependency failures, etc., services cannot guarantee 100% availability. Once a serv...

Service fault tolerance, sentinel

Server fault tolerance Case Solution 1. Timeout: The service instance of the caller, when the service calls, the response is not obtained for a period of time, the request is over. 2. Flow limit: prov...

Detailed Service Fault Tolerance

This article explains what is service fault tolerance Article directory service avalanche effect Common Fault Tolerance Schemes Common Fault Tolerant Components service avalanche effect Before talking...

Analysis of rpcx service framework 8-cluster fault tolerance mechanism

The RPCX Distributed Services Framework focuses on providing high performance and transparent RPC remote service calls. Cluster fault tolerance mechanism Failover Mode Failed to automatically switch, ...

More Recommendation

(54)Part14-Sentinel Service Fault Tolerance-02-Service Fault Tolerance

1. Fault-tolerant solution To prevent the spread of avalanches, we must do a good job of fault tolerance: some measures to protect ourselves from being dragged down by pig teammates. Common fault-tole...

Service Fault Tolerance Protection Hystrix

effect ProtectionThe overall availability of the service prevents the spread of faults caused by calls between services. Features serviceDowngrade, service blown, thread and...

Micro-service fault tolerance means

An introduction If the service provider response is very slow, then the consumer's request to the provider will be forced to wait until the provider response or timeout. At high load scenarios, if not...

SpringCloudHystrix service fault tolerance protection

Article Directory 1 Introduction 2. Quick start 3. Process analysis Write in front This article refers to the Spring Cloud microservices combat book from the program DD. This article is used as a read...

Hystrix service fault tolerance protection

1. What is the catastrophic avalanche effect? The reasons for the catastrophic avalanche effect can be summarized into the following three types: The service provider is unavailab...